Quality is paramount to all programming work carried out at Phastar to ensure accurate reporting and analysis of clinical trials. In order to maintain our high standards, we have SOPs and Work Instructions that detail our standardised internal processes for SAS programming and QC across studies and sites.

Other resources available to staff include “The Phastar Discipline”, our checklist of hints and tips collated from over 1500 years of industry experience, and a CDISC-specific checklist. We also run a full-day internal training course on delivering to quality, which includes a practical workshop; it is given to all new starters and offered as a refresher for experienced staff.

At Phastar, our datasets and outputs are checked at many stages during their creation.

Quality begins with the production programmer or statistician following the Phastar “Golden Rules”: understanding the task in hand (protocol, specifications, and industry guidelines); understanding the data to be reported; and producing good, clear and well-commented SAS code that runs without errors or warnings. At this stage, the production programmer also carries out sense checks by comparing the deliverable with other results before releasing it for independent QC.
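As an illustration of the "no errors and warnings" rule, the log check can be sketched in a few lines. This is a minimal Python sketch, not a Phastar tool: the function name `find_log_issues` and the sample log text are hypothetical, and it assumes only that SAS log messages of interest start with `ERROR` or `WARNING` at the beginning of a line.

```python
import re

# SAS log messages of interest start with ERROR or WARNING,
# e.g. "ERROR:", "ERROR 22-322:" or "WARNING:".
LOG_ISSUE = re.compile(r"^(ERROR|WARNING)\b")


def find_log_issues(log_text):
    """Return (line_number, line) pairs for every ERROR or WARNING line."""
    return [
        (number, line.rstrip())
        for number, line in enumerate(log_text.splitlines(), start=1)
        if LOG_ISSUE.match(line)
    ]


# Illustrative log text only; not taken from a real study.
sample_log = (
    "NOTE: There were 120 observations read from the data set WORK.ADSL.\n"
    "WARNING: The variable AGEGR1 in the DROP, KEEP, or RENAME list has never been referenced.\n"
    "NOTE: DATA statement used (Total process time):\n"
    "ERROR: File WORK.ADAE.DATA does not exist.\n"
)

issues = find_log_issues(sample_log)
log_is_clean = not issues
```

A deliverable would only move on to independent QC once `log_is_clean` is true. Teams often extend the pattern to selected `NOTE:` messages as well, but that choice is outside what is described here.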

The next stage is independent QC, where datasets and results are checked by an independent programmer or statistician. Key success drivers in this process are (i) maintaining a high degree of independence between the production and QC programmers and (ii) having well-written specifications. The production and QC programmers never view or update each other’s code, which is kept separate at all times. Differences are usually due to interpretation of the data and the specifications provided; for example, one programmer may have missed a footnote stating how a derivation should be calculated, or the specifications may be open to more than one interpretation and need further clarification.

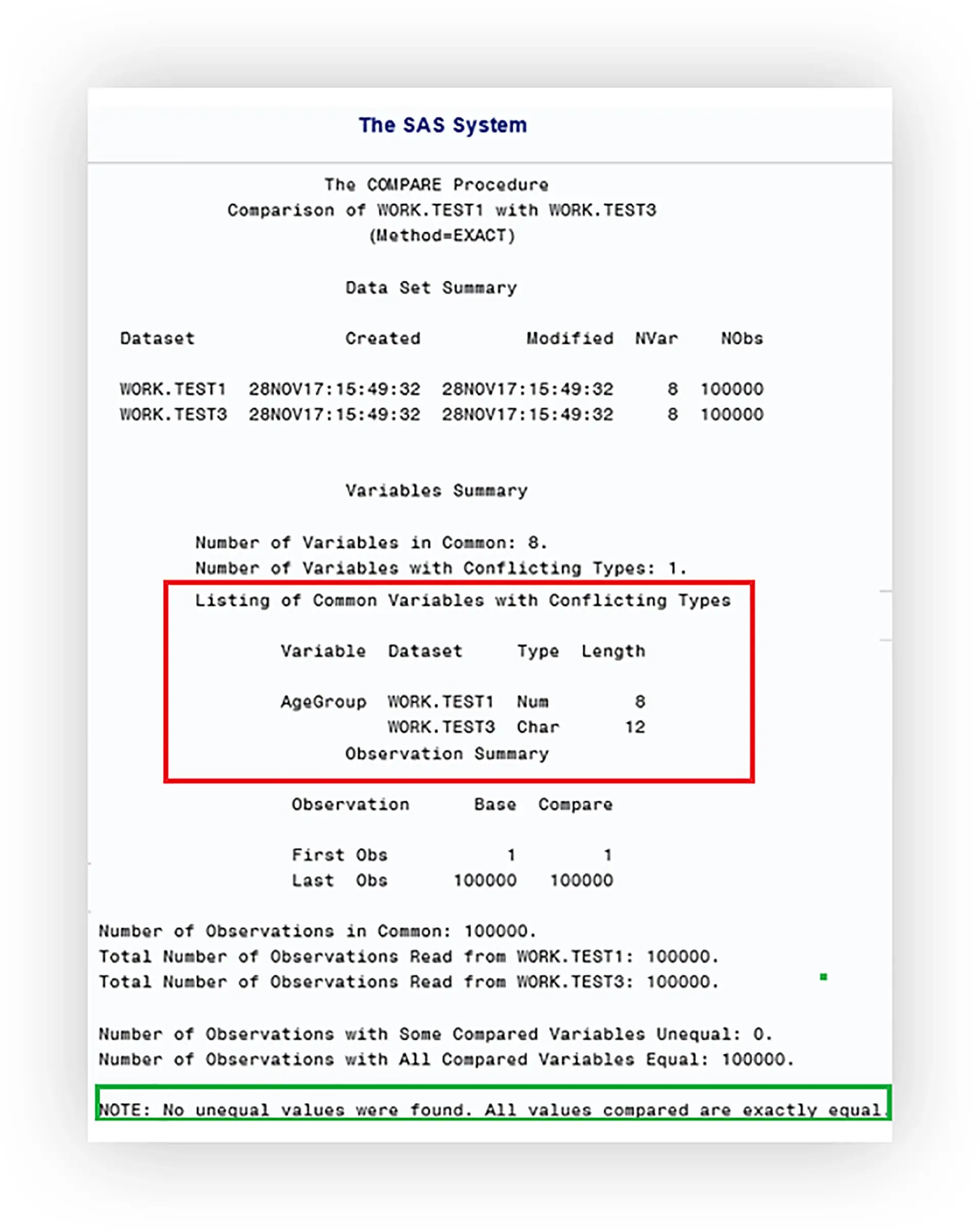

When the double programming method uses PROC COMPARE for a programmatic comparison, the output of the procedure should be properly understood. We shouldn’t rely solely on an “All observations are equal” message, which may mask potential issues!
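One way an "equal" message can mislead is that a value-level comparison typically covers only the variables and observations that both datasets have in common. The Python sketch below imitates that behaviour to make the point; it is not PROC COMPARE itself, and the names `compare_datasets` and `is_clean`, the report fields and the sample data are all hypothetical. It assumes each dataset is a list of rows with unique key values.

```python
def compare_datasets(prod, qc, keys):
    """Compare two datasets (lists of dict rows) matched on key variables.

    Values are compared only for common variables on matched rows, so the
    report also lists everything that was never compared.
    """
    prod_vars = set().union(*(row.keys() for row in prod))
    qc_vars = set().union(*(row.keys() for row in qc))
    common_vars = (prod_vars & qc_vars) - set(keys)

    # Assumes key values are unique within each dataset.
    prod_by_key = {tuple(row[k] for k in keys): row for row in prod}
    qc_by_key = {tuple(row[k] for k in keys): row for row in qc}
    common_keys = prod_by_key.keys() & qc_by_key.keys()

    unequal = [
        (key, var)
        for key in sorted(common_keys)
        for var in sorted(common_vars)
        if prod_by_key[key].get(var) != qc_by_key[key].get(var)
    ]
    return {
        "vars_only_in_prod": sorted(prod_vars - qc_vars),
        "vars_only_in_qc": sorted(qc_vars - prod_vars),
        "obs_only_in_prod": sorted(prod_by_key.keys() - qc_by_key.keys()),
        "obs_only_in_qc": sorted(qc_by_key.keys() - prod_by_key.keys()),
        "unequal_values": unequal,
    }


def is_clean(report):
    """A comparison passes only if nothing differs AND nothing was left out."""
    return not any(report.values())


# Illustrative data: the QC dataset is missing a variable and a subject.
prod = [
    {"USUBJID": "001", "AGE": 34, "SEX": "F"},
    {"USUBJID": "002", "AGE": 51, "SEX": "M"},
]
qc = [{"USUBJID": "001", "AGE": 34}]

report = compare_datasets(prod, qc, keys=["USUBJID"])
```

Here `report["unequal_values"]` is empty, so every compared value matches, yet `is_clean(report)` is false because `SEX` and subject `002` were never compared. Reading the whole comparison summary, not just the final message, is what catches this.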

The final quality check is performed by a senior statistical manager, who cross-checks each output against other results for sensibility and consistency (e.g. checking population counts against the disposition table), using their experience to pick up on nuances that can only be detected when interpreting the results as a whole.
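The mechanical part of such a cross-check, comparing population counts across outputs, can be sketched as follows. This is a hypothetical Python illustration: the function name `find_count_mismatches`, the table numbers and the counts are invented, and it does not replace the experienced review of the results as a whole.

```python
def find_count_mismatches(reference_counts, output_counts):
    """List outputs whose population counts differ from the reference.

    reference_counts: {treatment_arm: N} taken from the disposition table.
    output_counts:    {output_name: {treatment_arm: N}} from other outputs.
    Returns (output_name, arm, found, expected) tuples.
    """
    mismatches = []
    for output_name, counts in output_counts.items():
        for arm, found in counts.items():
            expected = reference_counts.get(arm)
            if found != expected:
                mismatches.append((output_name, arm, found, expected))
    return mismatches


# Illustrative numbers only.
disposition = {"Placebo": 50, "Active": 52}
outputs = {
    "Table 14.1.1": {"Placebo": 50, "Active": 52},
    "Table 14.3.1": {"Placebo": 50, "Active": 51},
}

mismatches = find_count_mismatches(disposition, outputs)
```

In this example the only entry flagged is the `Active` count in `Table 14.3.1` (51 found, 52 expected). Whether that reflects a genuine population difference or a programming error is a judgement for the reviewer.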

To monitor quality, QC is closely tracked from study setup through to final reporting. Each dataset, table, listing and figure is tracked in a document that indicates the stage it has reached, giving the lead programmer an at-a-glance overview of the status of every deliverable and of programming progress within the project.
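A tracker of this kind can be pictured as a simple mapping from deliverable to stage. The Python sketch below is illustrative only: the stage names, the deliverable names and the functions `summarise_status` and `outstanding` are assumptions, since the actual tracking document and its stages are not described here.

```python
from collections import Counter

# Hypothetical stages; the real tracker may use different ones.
STAGES = [
    "Not started",
    "In production",
    "Ready for QC",
    "QC in progress",
    "QC passed",
]


def summarise_status(tracker):
    """Count deliverables at each stage, in stage order."""
    unknown = set(tracker.values()) - set(STAGES)
    if unknown:
        raise ValueError(f"Unknown stage(s): {sorted(unknown)}")
    counts = Counter(tracker.values())
    return {stage: counts.get(stage, 0) for stage in STAGES}


def outstanding(tracker):
    """Deliverables that have not yet reached the final stage."""
    return sorted(name for name, stage in tracker.items() if stage != STAGES[-1])


# Illustrative deliverables only.
tracker = {
    "ADSL": "QC passed",
    "ADAE": "QC in progress",
    "Table 14.1.1": "Ready for QC",
    "Listing 16.2.1": "In production",
}

summary = summarise_status(tracker)
still_open = outstanding(tracker)
```

The summary gives the at-a-glance view, while the list of outstanding deliverables shows where attention is still needed.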